- Source: Multiple trace theory

The Bourne Identity (2002)

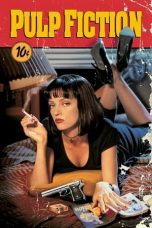

Pulp Fiction (1994)

Stream (2024)

No More Posts Available.

No more pages to load.

In psychology, multiple trace theory is a memory consolidation model advanced as an alternative to strength theory. It posits that each time some information is presented to a person, it is neurally encoded in a unique memory trace composed of a combination of its attributes. Further support for this theory came in the 1960s from empirical findings that people could remember specific attributes of an object without remembering the object itself. The mode in which the information is presented and subsequently encoded can be flexibly incorporated into the model. Each memory trace is distinct from all others resembling it because of differences in some of the item's attributes, and all memory traces incorporated since birth are combined into a multiple-trace representation in the brain. In memory research, a mathematical formulation of this theory can successfully explain empirical phenomena observed in recognition and recall tasks.

Attributes

The attributes an item possesses form its trace and can fall into many categories. When an item is committed to memory, information from each of these attributional categories is encoded into the item's trace. There may be a kind of semantic categorization at play, whereby an individual trace is incorporated into overarching concepts of an object. For example, when a person sees a pigeon, a trace is added to the "pigeon" cluster of traces within his or her mind. This new "pigeon" trace, while distinguishable and divisible from other instances of pigeons that the person may have seen within his or her life, serves to support the more general and overarching concept of a pigeon.

= Physical =

Physical attributes of an item encode information about physical properties of a presented item. For a word, this could include color, font, spelling, and size, while for a picture, the equivalent aspects could be shapes and colors of objects. It has been shown experimentally that people who are unable to recall an individual word can sometimes recall the first or last letter or even rhyming words, all aspects encoded in the physical orthography of a word's trace. Even when an item is not presented visually, when encoded, it may have some physical aspects based on a visual representation of the item.

= Contextual =

Contextual attributes are a broad class of attributes that define the internal and external features that are simultaneous with presentation of the item. Internal context is a sense of the internal network that a trace evokes. This may range from aspects of an individual's mood to other semantic associations the presentation of the word evokes. On the other hand, external context encodes information about the spatial and temporal aspects as information is being presented. This may reflect time of day or weather, for example. Spatial attributes can refer both to physical environment and imagined environment. The method of loci, a mnemonic strategy incorporating an imagined spatial position, involves assigning relative spatial positions to the items being memorized and then "walking through" these assigned positions to remember the items.

= Modal =

Modality attributes carry information about the method by which an item was presented. The most frequent modalities in an experimental setting are auditory and visual, but in practice any sensory modality may be used.

= Classifying =

These attributes refer to the categorization of items presented. Items that fit into the same categories will have the same class attributes. For example, if the item "touchdown" were presented, it would evoke the overarching concept of "football" or perhaps, more generally, "sports", and it would likely share class attributes with "endzone" and other elements that fit into the same concept. A single item may fit into different concepts at the time it is presented depending on other attributes of the item, like context. For example, the word "star" might fall into the class of astronomy after visiting a space museum or a class with words like "celebrity" or "famous" after seeing a movie.

Mathematical formulation

The mathematical formulation of traces allows for a model of memory as an ever-growing matrix that is continuously receiving and incorporating information in the form of vectors of attributes. Multiple trace theory states that every item ever encoded, from birth to death, will exist in this matrix as multiple traces. This is done by giving every possible attribute some numerical value to classify it as it is encoded, so each encoded memory will have a unique set of numerical attributes.

= Matrix definition of traces =

By assigning numerical values to all possible attributes, it is convenient to construct a column vector representation of each encoded item. This vector representation can also be fed into computational models of the brain like neural networks, which take as inputs vectorial "memories" and simulate their biological encoding through neurons.

Formally, one can denote an encoded memory by numerical assignments to all of its possible attributes. If two items are perceived to have the same color or experienced in the same context, the numbers denoting their color and contextual attributes, respectively, will be relatively close. Suppose we encode a total of L attributes anytime we see an object. Then, when a memory is encoded, it can be written as m1 with L total numerical entries in a column vector:

$$\mathbf{m}_1 = \begin{bmatrix} m_1(1) \\ m_1(2) \\ m_1(3) \\ \vdots \\ m_1(L) \end{bmatrix}.$$

A subset of the L attributes will be devoted to contextual attributes, a subset to physical attributes, and so on. One underlying assumption of multiple trace theory is that, when we construct multiple memories, we organize the attributes in the same order. Thus, we can similarly define vectors m2, m3, ..., mn to account for n total encoded memories. Multiple trace theory states that these memories come together in our brain to form a memory matrix from the simple concatenation of the individual memories:

$$\mathbf{M} = \begin{bmatrix} \mathbf{m}_1 & \mathbf{m}_2 & \mathbf{m}_3 & \cdots & \mathbf{m}_n \end{bmatrix} = \begin{bmatrix} m_1(1) & m_2(1) & m_3(1) & \cdots & m_n(1) \\ m_1(2) & m_2(2) & m_3(2) & \cdots & m_n(2) \\ \vdots & \vdots & \vdots & \vdots & \vdots \\ m_1(L) & m_2(L) & m_3(L) & \cdots & m_n(L) \end{bmatrix}.$$

For L total attributes and n total memories, M will have L rows and n columns. Note that, although the n traces are combined into a large memory matrix, each trace is individually accessible as a column in this matrix.
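As a rough illustration of the matrix construction above, the following sketch stores each trace as a list of L numbers and assembles an L-by-n matrix whose columns are the traces. The names `build_memory_matrix` and `get_trace` and the example attribute values are hypothetical, chosen only for this illustration.

```python
from typing import List

Trace = List[float]  # one encoded memory: L numerical attribute values


def build_memory_matrix(traces: List[Trace]) -> List[List[float]]:
    """Concatenate n traces (each of length L) as the columns of an L x n matrix."""
    if not traces:
        return []
    num_attributes = len(traces[0])
    if any(len(t) != num_attributes for t in traces):
        # The theory assumes every trace lists the same attributes in the same order.
        raise ValueError("all traces must have the same L attributes")
    # Row j holds attribute j of every trace; column i is trace m_i.
    return [[trace[j] for trace in traces] for j in range(num_attributes)]


def get_trace(matrix: List[List[float]], i: int) -> Trace:
    """Recover an individual trace as column i (0-based) of the matrix."""
    return [row[i] for row in matrix]


# Illustrative values only: two traces with L = 3 attributes each.
m1 = [0.2, 0.9, 0.4]
m2 = [0.3, 0.1, 0.5]
M = build_memory_matrix([m1, m2])  # 3 rows (attributes), 2 columns (memories)
```

Storing the matrix row by row mirrors the written form of M, while `get_trace` reflects the point that each trace remains individually accessible as a column.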

In this formulation, the n different memories are more or less independent of each other. However, items presented together in some setting become tangentially associated through the similarity of their context vectors. If multiple items, say an item a and an item b, are associated with each other and intentionally encoded in that manner, then, with each item having k attributes, the memory for the pair can be constructed as follows:

$$\mathbf{m}_{ab} = \begin{bmatrix} a(1) \\ a(2) \\ \vdots \\ a(k) \\ b(1) \\ b(2) \\ \vdots \\ b(k) \end{bmatrix} = \begin{bmatrix} \mathbf{a} \\ \mathbf{b} \end{bmatrix}.$$
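The stacked vector for an associated pair can be sketched as a simple concatenation. The helper names `encode_pair` and `split_pair` are hypothetical and serve only to illustrate the layout of the a and b halves.

```python
from typing import List, Tuple


def encode_pair(a: List[float], b: List[float]) -> List[float]:
    """Stack the k attributes of item a on top of the k attributes of item b."""
    if len(a) != len(b):
        raise ValueError("items a and b must each have k attributes")
    return list(a) + list(b)


def split_pair(m_ab: List[float]) -> Tuple[List[float], List[float]]:
    """Recover the a half and the b half of an associative trace of length 2k."""
    k = len(m_ab) // 2
    return m_ab[:k], m_ab[k:]


# Illustrative values only, with k = 2.
m_ab = encode_pair([1.0, 2.0], [3.0, 4.0])
a_part, b_part = split_pair(m_ab)
```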

= Context as a stochastic vector =

When items are learned one after another, it is tempting to say that they are learned in the same temporal context. However, in reality, there are subtle variations in context. Hence, contextual attributes are often considered to be changing over time as modeled by a stochastic process. Considering a vector of only r total context attributes ti that represents the context of memory mi, the context of the next-encoded memory is given by ti+1:

$$t_{i+1}(j) = t_i(j) + \epsilon(j)$$

so,

$$\mathbf{t}_{i+1} = \begin{bmatrix} t_i(1) + \epsilon(1) \\ t_i(2) + \epsilon(2) \\ \vdots \\ t_i(r) + \epsilon(r) \end{bmatrix}$$

Here, ε(j) is a random number sampled from a Gaussian distribution.
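The drift rule can be simulated in a few lines. This is a minimal sketch that assumes the noise is zero-mean with a fixed standard deviation `sigma` and is drawn independently for each attribute and each step; the text specifies only that the noise is Gaussian, so these choices and the function names are assumptions.

```python
import random
from typing import List


def drift_context(t_i: List[float], sigma: float, rng: random.Random) -> List[float]:
    """Return t_{i+1}, where t_{i+1}(j) = t_i(j) + eps(j) and eps(j) ~ N(0, sigma^2)."""
    return [value + rng.gauss(0.0, sigma) for value in t_i]


def context_sequence(t0: List[float], n: int, sigma: float, seed: int = 0) -> List[List[float]]:
    """Generate the context vectors for n successively encoded memories."""
    rng = random.Random(seed)  # seeded so that a run is reproducible
    contexts = [list(t0)]
    for _ in range(n - 1):
        contexts.append(drift_context(contexts[-1], sigma, rng))
    return contexts


# Illustrative run: r = 3 context attributes, 5 memories, small drift per step.
contexts = context_sequence([0.0, 0.0, 0.0], n=5, sigma=0.1)
```

Because each context is built from the previous one, neighbouring memories end up with similar context vectors while distant ones tend to differ more.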

= Summed similarity =

As explained in the subsequent section, the hallmark of multiple trace theory is an ability to compare some probe item to the pre-existing matrix of encoded memories. This simulates the memory search process, whereby we can determine whether we have ever seen the probe before as in recognition tasks or whether the probe gives rise to another previously encoded memory as in cued recall.

First, the probe p is encoded as an attribute vector. Continuing with the preceding example of the memory matrix M, the probe will have L entries:

$$\mathbf{p} = \begin{bmatrix} p(1) \\ p(2) \\ \vdots \\ p(L) \end{bmatrix}.$$

This p is then compared one by one to all pre-existing memories (traces) in M by determining the Euclidean distance between p and each mi:

$$\left\| \mathbf{p} - \mathbf{m}_i \right\| = \sqrt{\sum_{j=1}^{L} \left( p(j) - m_i(j) \right)^2}.$$
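The distance computation between a probe and a single trace can be sketched directly from this formula. The function name `euclidean_distance` is chosen here for illustration.

```python
import math
from typing import Sequence


def euclidean_distance(p: Sequence[float], m_i: Sequence[float]) -> float:
    """Euclidean distance between probe p and trace m_i over all L attributes."""
    if len(p) != len(m_i):
        raise ValueError("probe and trace must have the same number of attributes")
    return math.sqrt(sum((pj - mj) ** 2 for pj, mj in zip(p, m_i)))


# A probe identical to a trace is at distance 0; differing attributes increase it.
d_same = euclidean_distance([0.5, 0.5], [0.5, 0.5])
d_far = euclidean_distance([0.0, 0.0], [3.0, 4.0])
```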

Due to the stochastic nature of context, it is almost never the case in multiple trace theory that a probe item exactly matches an encoded memory. Still, high similarity between p and mi is indicated by a small Euclidean distance. Hence, another operation must be performed on the distance that leads to very low similarity for great distance and very high similarity for small distance. A linear operation does not eliminate low-similarity items harshly enough. Intuitively, an exponential decay model seems most suitable:

$$\operatorname{similarity}(\mathbf{p}, \mathbf{m}_i) = e^{-\tau \left\| \mathbf{p} - \mathbf{m}_i \right\|}$$

where τ is a decay parameter that can be experimentally assigned. We can go on to then define similarity to the entire memory matrix by a summed similarity SS(p,M) between the probe p and the memory matrix M:

$$\operatorname{SS}(\mathbf{p}, \mathbf{M}) = \sum_{i=1}^{n} e^{-\tau \left\| \mathbf{p} - \mathbf{m}_i \right\|} = \sum_{i=1}^{n} e^{-\tau \sqrt{\sum_{j=1}^{L} \left( p(j) - m_i(j) \right)^2}}.$$

If the probe item is very similar to even one of the encoded memories, SS receives a large boost. For example, given m1 as a probe item, we will get a near 0 distance (not exactly due to context) for i=1, which will add nearly the maximal boost possible to SS. To differentiate from background similarity (there will always be some low similarity to context or a few attributes for example), SS is often compared to some arbitrary criterion. If it is higher than the criterion, then the probe is considered among those encoded. The criterion can be varied based on the nature of the task and the desire to prevent false alarms. Thus, multiple trace theory predicts that, given some cue, the brain can compare that cue to a criterion to answer questions like "has this cue been experienced before?" (recognition) or "what memory does this cue elicit?" (cued recall), which are applications of summed similarity described below.
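The similarity and summed-similarity computations described in this section can be sketched as follows. Memory is represented here as a list of traces (the columns of M); the function names, the example vectors, and the value of `tau` are illustrative assumptions rather than values given by the theory.

```python
import math
from typing import List, Sequence


def euclidean_distance(p: Sequence[float], m_i: Sequence[float]) -> float:
    """Euclidean distance between a probe and one trace."""
    return math.sqrt(sum((pj - mj) ** 2 for pj, mj in zip(p, m_i)))


def similarity(p: Sequence[float], m_i: Sequence[float], tau: float) -> float:
    """Exponentially decaying similarity: exp(-tau * distance)."""
    return math.exp(-tau * euclidean_distance(p, m_i))


def summed_similarity(p: Sequence[float], traces: List[Sequence[float]], tau: float) -> float:
    """SS(p, M): similarity of the probe summed over every trace in memory."""
    return sum(similarity(p, m_i, tau) for m_i in traces)


# Illustrative memory with three traces of L = 2 attributes each.
memory = [[0.0, 0.0], [3.0, 4.0], [10.0, 10.0]]
ss_old = summed_similarity([3.0, 4.0], memory, tau=1.0)     # probe matches a stored trace
ss_new = summed_similarity([50.0, 50.0], memory, tau=1.0)   # probe far from every trace
```

A probe that coincides with a stored trace contributes a similarity of exactly 1 for that trace, which is the "maximal boost" mentioned above; distant traces contribute values close to 0.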

Applications to memory phenomena

= Recognition =

Multiple trace theory fits well into the conceptual framework for recognition. Recognition requires an individual to determine whether or not they have seen an item before. For example, facial recognition is determining whether one has seen a face before. When asked this for a successfully encoded item (something that has indeed been seen before), recognition should occur with high probability. In the mathematical framework of this theory, we can model recognition of an individual probe item p by summed similarity with a criterion. We translate the test item into an attribute vector, as done for the encoded memories, and compare it to every trace ever encountered. If summed similarity passes the criterion, we say we have seen the item before. Summed similarity is expected to be very low if the item has never been seen but relatively higher if it has, due to the similarity of the probe's attributes to some memory in the memory matrix.

$$P(\text{recognizing } \mathbf{p}) = P\left( \operatorname{SS}(\mathbf{p}, \mathbf{M}) > \text{criterion} \right)$$

This can be applied both to individual item recognition and associative recognition for two or more items together.
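The recognition decision can be sketched as a threshold on summed similarity. This is a deterministic simplification for a single probe: the probability in the formula arises from variability (for example in context) across trials, which is not modelled here. The function names, `tau`, and the criterion value are assumptions for illustration.

```python
import math
from typing import List, Sequence


def summed_similarity(p: Sequence[float], traces: List[Sequence[float]], tau: float) -> float:
    """SS(p, M) = sum over traces of exp(-tau * Euclidean distance)."""
    total = 0.0
    for m_i in traces:
        distance = math.sqrt(sum((pj - mj) ** 2 for pj, mj in zip(p, m_i)))
        total += math.exp(-tau * distance)
    return total


def recognize(p: Sequence[float], traces: List[Sequence[float]], tau: float, criterion: float) -> bool:
    """Report 'seen before' when summed similarity exceeds the criterion."""
    return summed_similarity(p, traces, tau) > criterion


# Illustrative memory of two traces and an arbitrary criterion of 0.5.
memory = [[0.0, 0.0], [10.0, 10.0]]
seen = recognize([0.0, 0.1], memory, tau=1.0, criterion=0.5)    # close to a stored trace
unseen = recognize([5.0, 5.0], memory, tau=1.0, criterion=0.5)  # far from both traces
```

Raising the criterion makes the sketch more conservative, trading missed recognitions for fewer false alarms.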

= Cued recall =

The theory can also account for cued recall. Here, some cue is given that is meant to elicit an item out of memory. For example, a factual question like "Who was the first President of the United States?" is a cue to elicit the answer of "George Washington". In the "ab" framework described above, we can take all attributes present in a cue and consider these the a item in an encoded association as we try to recall the b portion of the mab memory. In this example, attributes like "first", "President", and "United States" will be combined to form the a vector, which will have already been formulated into the mab memory whose b values encode "George Washington". Given a, there are two popular models for how we can successfully recall b:

1) We can go through and determine similarity (not summed similarity, see above for distinction) to every item in memory for the a attributes, then pick whichever memory has the highest similarity on those attributes. The b-type attributes linked to that memory are what we recall. The mab memory has the best chance of being recalled since its a elements will have high similarity to the cue a. Still, since recall does not always occur, the similarity must pass a criterion for recall to occur at all. This is similar to how the IBM machine Watson operates. Here, the similarity compares only the a-type attributes of a to mab.

$$P(\text{recalling } \mathbf{m}_{ab}) = P\left( \operatorname{similarity}(\mathbf{a}, \mathbf{m}_{ab}) > \text{criterion} \right)$$

2) We can use a probabilistic choice rule to determine probability of recalling an item as proportional to its similarity. This is akin to throwing a dart at a dartboard with bigger areas represented by larger similarities to the cue item. Mathematically speaking, given the cue a, the probability of recalling the desired memory mab is:

$$P(\text{recalling } \mathbf{m}_{ab}) = \frac{\operatorname{similarity}(\mathbf{a}, \mathbf{m}_{ab})}{\operatorname{SS}(\mathbf{a}, \mathbf{M}) + \text{error}}$$

In computing both similarity and summed similarity, we only consider relations among a-type attributes. We add the error term because without it, the recall probabilities of the memories in M would sum to 1, meaning that some memory would always be recalled, but there are certainly times when recall does not occur at all.
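Both recall models can be sketched side by side. In this illustration memory is a list of (a, b) pairs, similarity is computed on the a-type attributes only, and the names `recall_best_match` and `recall_probabilities`, as well as the values of `tau`, `criterion`, and `error`, are assumptions made for the example.

```python
import math
from typing import List, Optional, Sequence, Tuple

Pair = Tuple[Sequence[float], Sequence[float]]  # (a attributes, b attributes)


def similarity(cue: Sequence[float], a: Sequence[float], tau: float) -> float:
    """exp(-tau * Euclidean distance), computed on the a-type attributes only."""
    distance = math.sqrt(sum((c - x) ** 2 for c, x in zip(cue, a)))
    return math.exp(-tau * distance)


def recall_best_match(cue: Sequence[float], pairs: List[Pair], tau: float,
                      criterion: float) -> Optional[Sequence[float]]:
    """Model 1: recall the b of the most similar trace if it passes the criterion."""
    if not pairs:
        return None
    best_a, best_b = max(pairs, key=lambda ab: similarity(cue, ab[0], tau))
    return best_b if similarity(cue, best_a, tau) > criterion else None


def recall_probabilities(cue: Sequence[float], pairs: List[Pair], tau: float,
                         error: float) -> List[float]:
    """Model 2: probability of recalling each trace, proportional to its similarity."""
    sims = [similarity(cue, a, tau) for a, _ in pairs]
    denominator = sum(sims) + error  # SS(a, M) + error
    return [s / denominator for s in sims]


pairs = [([0.0, 0.0], [1.0, 1.0]), ([5.0, 5.0], [2.0, 2.0])]
recalled = recall_best_match([0.0, 0.1], pairs, tau=1.0, criterion=0.5)
probs = recall_probabilities([0.0, 0.1], pairs, tau=1.0, error=0.1)
```

With a positive `error`, the probabilities in `probs` sum to less than 1, and the remainder can be read as the probability that nothing is recalled.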

= Other common results explained =

Phenomena in memory associated with repetition, word frequency, recency, forgetting, and contiguity, among others, can be easily explained in the realm of multiple trace theory. Memory is known to improve with repeated exposure to items. For example, hearing a word several times in a list will improve recognition and recall of that word later on. This is because each repeated exposure adds another trace of the item to the ever-growing memory matrix, so summed similarity for this item will be larger and thus more likely to pass the criterion.
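The repetition effect can be illustrated numerically: adding a second trace of the same item, encoded in a slightly different context, raises the summed similarity for a later probe of that item. The vectors and `tau` below are made-up values for illustration.

```python
import math
from typing import List, Sequence


def summed_similarity(p: Sequence[float], traces: List[Sequence[float]], tau: float) -> float:
    """SS(p, M) = sum over traces of exp(-tau * Euclidean distance)."""
    return sum(
        math.exp(-tau * math.sqrt(sum((pj - mj) ** 2 for pj, mj in zip(p, m_i))))
        for m_i in traces
    )


# First attribute stands for the item itself, second for its (drifting) context.
memory = [[0.0, 0.0], [4.0, 3.0]]   # the item [4.0, ...] has been presented once
probe = [4.0, 3.1]                  # the same item tested in a nearby context

ss_before = summed_similarity(probe, memory, tau=1.0)
memory.append([4.0, 2.9])           # second presentation adds a new, similar trace
ss_after = summed_similarity(probe, memory, tau=1.0)
```

Since every similarity term is positive, `ss_after` is always larger than `ss_before`, and the increase is largest when the new trace closely resembles the probe.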

In recognition, very common words are harder to recognize as part of a memorized list, when tested, than rare words. This is known as the word frequency effect and can be explained by multiple trace theory as well. For common words, summed similarity will be relatively high, whether the word was seen in the list or not, because it is likely that the word has been encountered and encoded in the memory matrix several times throughout life. Thus, the brain typically selects a higher criterion in determining whether common words are part of a list, making them harder to successfully select. However, rarer words are typically encountered less throughout life and so their presence in the memory matrix is limited. Hence, low overall summed similarity will lead to a more lax criterion. If the word was present in the list, high context similarity at time of test and other attribute similarity will boost summed similarity enough to exceed the criterion, so the rare word is recognized successfully.

Recency in the serial position effect can be explained because more recently encoded memories will share a temporal context most similar to the present context, as stochastic contextual drift will not yet have had as pronounced an effect. Thus, context similarity will be high for recently encoded items, so overall similarity will be relatively higher for these items as well. The stochastic contextual drift is also thought to account for forgetting because the context in which a memory was encoded is lost over time, so summed similarity for an item only presented in that context will decrease over time.
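The link between drift and recency can be made concrete with a short derivation. It assumes, beyond what the text states, that the noise terms are zero-mean with a common variance and are independent across encoding steps and attributes; the step index s and the variance symbol are introduced here only for this sketch.

```latex
% Assumption: \epsilon_s(j) \sim \mathcal{N}(0, \sigma^2), independent over steps s and attributes j
\begin{align*}
t_{i+k}(j) - t_i(j) &= \sum_{s=1}^{k} \epsilon_s(j) \sim \mathcal{N}(0,\, k\sigma^2), \\
\mathbb{E}\left[ \left\| \mathbf{t}_{i+k} - \mathbf{t}_i \right\|^2 \right]
  &= \sum_{j=1}^{r} k\sigma^2 = r k \sigma^2 .
\end{align*}
```

Under these assumptions the root-mean-square context distance between two memories grows as the square root of the number of encoding steps k separating them, so the exponential similarity term tends to be larger for recently encoded items and smaller for older ones.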

Finally, empirical data have shown a contiguity effect, whereby items that are presented together temporally, even though they may not be encoded as a single memory as in the "ab" paradigm described above, are more likely to be remembered together. This can be considered a result of low contextual drift between items remembered together, so the contextual similarity between two items presented together is high.

Shortcomings

One of the biggest shortcomings of multiple trace theory is the requirement of some item with which to compare the memory matrix when determining successful encoding. As mentioned above, this works quite well in recognition and cued recall, but there is a glaring inability to incorporate free recall into the model. Free recall requires an individual to freely remember some list of items. Although the very act of asking to recall may act as a cue that can then elicit cued recall techniques, it is unlikely that the cue is unique enough to reach a summed similarity criterion or to otherwise achieve a high probability of recall.

Another major issue lies in translating the model to biological relevance. It is hard to imagine that the brain has unlimited capacity to keep track of such a large matrix of memories and continue expanding it with every item with which it has ever been presented. Furthermore, searching through this matrix is an exhaustive process that would not be feasible on biological time scales.

See also

Engram (neuropsychology)

Hierarchical temporal memory

Sparse distributed memory