
Jurassic World (2015)

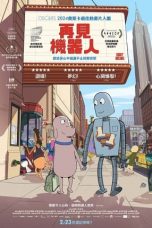

Robot Dreams (2023)

Partial autocorrelation function GudangMovies21 Rebahinxxi LK21

In time series analysis, the partial autocorrelation function (PACF) gives the partial correlation of a stationary time series with its own lagged values, controlling for the values of the time series at all shorter lags. It contrasts with the autocorrelation function, which does not control for other lags.

This function plays an important role in data analysis aimed at identifying the order (the number of lags) of an autoregressive (AR) model. Its use was introduced as part of the Box–Jenkins approach to time series modelling, in which plotting the partial autocorrelation function lets one determine the appropriate lag order p of an AR(p) model or of an extended ARIMA(p,d,q) model.

Definition

Given a time series $z_t$, the partial autocorrelation of lag $k$, denoted $\phi_{k,k}$, is the autocorrelation between $z_t$ and $z_{t+k}$ with the linear dependence of $z_t$ on $z_{t+1}$ through $z_{t+k-1}$ removed. Equivalently, it is the autocorrelation between $z_t$ and $z_{t+k}$ that is not accounted for by lags $1$ through $k-1$, inclusive.

$$\phi_{1,1} = \operatorname{corr}(z_{t+1}, z_t), \quad \text{for } k = 1,$$

$$\phi_{k,k} = \operatorname{corr}(z_{t+k} - \hat{z}_{t+k},\, z_t - \hat{z}_t), \quad \text{for } k \geq 2,$$

where $\hat{z}_{t+k}$ and $\hat{z}_t$ are the linear combinations of $\{z_{t+1}, z_{t+2}, \ldots, z_{t+k-1}\}$ that minimize the mean squared error of predicting $z_{t+k}$ and $z_t$, respectively. For stationary processes, the coefficients in $\hat{z}_{t+k}$ and $\hat{z}_t$ are the same, but in reverse order:

$$\hat{z}_{t+k} = \beta_1 z_{t+k-1} + \cdots + \beta_{k-1} z_{t+1} \qquad \text{and} \qquad \hat{z}_t = \beta_1 z_{t+1} + \cdots + \beta_{k-1} z_{t+k-1}.$$
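The residual-correlation definition can be checked numerically. The sketch below assumes NumPy and a simulated AR(1) series with an illustrative coefficient of 0.6. It replaces the population MSE-minimizing predictors with sample least-squares fits, and it estimates the forward and backward coefficients separately instead of imposing the reversed-coefficient symmetry. The helper name `pacf_by_residuals` is hypothetical.

```python
import numpy as np


def pacf_by_residuals(z, k):
    """Sample PACF at lag k via the residual-correlation definition (a sketch)."""
    z = np.asarray(z, dtype=float)
    z = z - z.mean()                       # zero-mean series, so no intercept is fitted
    m = len(z) - k                         # number of aligned (z_t, z_{t+k}) pairs
    head = z[:m]                           # z_t
    tail = z[k:]                           # z_{t+k}
    if k == 1:
        # No intermediate values: phi_{1,1} = corr(z_{t+1}, z_t).
        return float(np.corrcoef(tail, head)[0, 1])
    # Column j-1 holds z_{t+j} for the intermediate lags j = 1, ..., k-1.
    X = np.column_stack([z[j:j + m] for j in range(1, k)])
    beta_fwd, *_ = np.linalg.lstsq(X, tail, rcond=None)  # coefficients of zhat_{t+k}
    beta_bwd, *_ = np.linalg.lstsq(X, head, rcond=None)  # coefficients of zhat_t
    resid_fwd = tail - X @ beta_fwd        # z_{t+k} - zhat_{t+k}
    resid_bwd = head - X @ beta_bwd        # z_t - zhat_t
    return float(np.corrcoef(resid_fwd, resid_bwd)[0, 1])


# Simulated AR(1) series z_t = 0.6 z_{t-1} + e_t (illustrative parameters).
rng = np.random.default_rng(0)
n, phi = 20_000, 0.6
eps = rng.standard_normal(n)
z = np.empty(n)
z[0] = eps[0]
for t in range(1, n):
    z[t] = phi * z[t - 1] + eps[t]

for k in (1, 2, 3):
    print(k, round(pacf_by_residuals(z, k), 3))
```

For this AR(1) process the lag-1 value should be close to 0.6 and the lag-2 and lag-3 values close to 0: once the intermediate values are accounted for, $z_t$ carries no further linear information about $z_{t+k}$.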

Calculation

The theoretical partial autocorrelation function of a stationary time series can be calculated by using the Durbin–Levinson Algorithm:

$$\phi_{n,n} = \frac{\rho(n) - \sum_{k=1}^{n-1} \phi_{n-1,k}\,\rho(n-k)}{1 - \sum_{k=1}^{n-1} \phi_{n-1,k}\,\rho(k)}$$

where $\phi_{n,k} = \phi_{n-1,k} - \phi_{n,n}\,\phi_{n-1,n-k}$ for $1 \leq k \leq n-1$, $\rho(n)$ is the autocorrelation function, and the recursion starts from $\phi_{1,1} = \rho(1)$.
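The recursion translates directly into a short loop. The following is a minimal pure-Python sketch, assuming the autocorrelations are supplied as a list `rho` with `rho[0] == 1`; the function name `durbin_levinson` is hypothetical. The usage lines feed it the theoretical autocorrelations of an AR(1) process with coefficient 0.6, for which $\rho(h) = 0.6^h$, and of an MA(1) process $z_t = e_t + 0.5\,e_{t-1}$, for which $\rho(1) = 0.5/1.25 = 0.4$ and $\rho(h) = 0$ for $h \geq 2$.

```python
def durbin_levinson(rho, max_lag):
    """Return [phi_{1,1}, ..., phi_{max_lag,max_lag}] from autocorrelations.

    rho[h] is the autocorrelation at lag h (rho[0] == 1); len(rho) must exceed max_lag.
    """
    pacf = []
    prev = []                              # phi_{n-1,1}, ..., phi_{n-1,n-1}
    for n in range(1, max_lag + 1):
        num = rho[n] - sum(prev[k - 1] * rho[n - k] for k in range(1, n))
        den = 1.0 - sum(prev[k - 1] * rho[k] for k in range(1, n))
        phi_nn = num / den
        # phi_{n,k} = phi_{n-1,k} - phi_{n,n} * phi_{n-1,n-k} for 1 <= k <= n-1
        cur = [prev[k - 1] - phi_nn * prev[n - k - 1] for k in range(1, n)]
        prev = cur + [phi_nn]
        pacf.append(phi_nn)
    return pacf


# AR(1) with coefficient 0.6: rho(h) = 0.6**h.
ar1_rho = [0.6 ** h for h in range(6)]
print([round(v, 6) for v in durbin_levinson(ar1_rho, 5)])

# MA(1) z_t = e_t + 0.5 e_{t-1}: rho(1) = 0.4, rho(h) = 0 for h >= 2.
ma1_rho = [1.0, 0.4, 0.0, 0.0, 0.0, 0.0]
print([round(v, 6) for v in durbin_levinson(ma1_rho, 5)])
```

For the AR(1) input the result is 0.6 followed by values that are zero up to rounding error, since for example $\phi_{2,2} = (\rho(2) - \rho(1)^2)/(1 - \rho(1)^2) = (0.36 - 0.36)/0.64 = 0$. For the MA(1) input the values alternate in sign and shrink in magnitude without cutting off.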

The formula above can be used with sample autocorrelations to find the sample partial autocorrelation function of any given time series.
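A sample version follows the same path: estimate the autocorrelations from the data, then run the recursion on them. This sketch assumes NumPy, uses the common estimator $r_h = \sum_t (z_t - \bar{z})(z_{t+h} - \bar{z}) / \sum_t (z_t - \bar{z})^2$, and simulates an AR(2) series with illustrative coefficients 0.5 and 0.3. The helper names are hypothetical. For this model the theoretical values are $\phi_{1,1} = 0.5/(1 - 0.3) \approx 0.714$, $\phi_{2,2} = 0.3$, and zero beyond lag 2, so the sample estimates should land near those numbers.

```python
import numpy as np


def sample_acf(z, max_lag):
    """Sample autocorrelations r_0, ..., r_max_lag (sum of products over total sum of squares)."""
    z = np.asarray(z, dtype=float) - np.mean(z)
    denom = float(np.dot(z, z))
    return [1.0] + [float(np.dot(z[:-h], z[h:])) / denom for h in range(1, max_lag + 1)]


def durbin_levinson(rho, max_lag):
    """phi_{n,n} for n = 1..max_lag via the Durbin-Levinson recursion."""
    pacf, prev = [], []
    for n in range(1, max_lag + 1):
        num = rho[n] - sum(prev[k - 1] * rho[n - k] for k in range(1, n))
        den = 1.0 - sum(prev[k - 1] * rho[k] for k in range(1, n))
        phi_nn = num / den
        # Update phi_{n,k} = phi_{n-1,k} - phi_{n,n} * phi_{n-1,n-k}, then append phi_{n,n}.
        prev = [prev[k - 1] - phi_nn * prev[n - k - 1] for k in range(1, n)] + [phi_nn]
        pacf.append(phi_nn)
    return pacf


# Simulated AR(2): z_t = 0.5 z_{t-1} + 0.3 z_{t-2} + e_t (illustrative parameters).
rng = np.random.default_rng(1)
burn, n = 200, 10_000
eps = rng.standard_normal(n + burn)
z = np.zeros(n + burn)
for t in range(2, n + burn):
    z[t] = 0.5 * z[t - 1] + 0.3 * z[t - 2] + eps[t]
z = z[burn:]                               # drop the start-up transient

sample_pacf = durbin_levinson(sample_acf(z, 5), 5)
print([round(v, 3) for v in sample_pacf])
```

In practice, libraries such as statsmodels provide this computation (`statsmodels.tsa.stattools.pacf`), with a `method` argument that selects among several estimators; the hand-written version above only illustrates the recursion.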

Examples

The following table summarizes the partial autocorrelation function of different models:

The behavior of the partial autocorrelation function mirrors that of the autocorrelation function for autoregressive and moving-average models. For example, the partial autocorrelation function of an AR(p) series cuts off after lag p, just as the autocorrelation function of an MA(q) series cuts off after lag q. Conversely, the autocorrelation function of an AR(p) process tails off, as does the partial autocorrelation function of an MA(q) process.
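The mirror-image behavior is easiest to see in the first-order cases, which have closed forms. This sketch assumes the MA(1) sign convention $z_t = e_t + \theta e_{t-1}$ with $|\theta| < 1$ and uses the standard textbook closed form for the MA(1) partial autocorrelation, stated here without derivation; it agrees with the Durbin–Levinson recursion applied to the MA(1) autocorrelations. The function names and the value 0.6 for both $\phi$ and $\theta$ are illustrative assumptions.

```python
def ar1_acf(phi, h):
    return phi ** h                                      # rho(h) = phi^h: tails off


def ar1_pacf(phi, h):
    return phi if h == 1 else 0.0                        # cuts off after lag 1


def ma1_acf(theta, h):
    return theta / (1 + theta ** 2) if h == 1 else 0.0   # cuts off after lag 1


def ma1_pacf(theta, h):
    # Closed form for z_t = e_t + theta * e_{t-1}, |theta| < 1: tails off geometrically.
    return -((-theta) ** h) * (1 - theta ** 2) / (1 - theta ** (2 * (h + 1)))


phi = theta = 0.6
print("lag   AR(1) ACF  AR(1) PACF  MA(1) ACF  MA(1) PACF")
for h in range(1, 7):
    print(f"{h:>3} {ar1_acf(phi, h):10.4f} {ar1_pacf(phi, h):11.4f}"
          f" {ma1_acf(theta, h):10.4f} {ma1_pacf(theta, h):11.4f}")
```

The AR(1) columns show a decaying ACF next to a PACF that is zero after lag 1; the MA(1) columns show the reverse, with a sign-alternating PACF for positive $\theta$.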

Autoregressive model identification

Partial autocorrelation is a commonly used tool for identifying the order of an autoregressive model. As previously mentioned, the partial autocorrelation of an AR(p) process is zero at lags greater than p. If an AR model is determined to be appropriate, then the sample partial autocorrelation plot is examined to help identify the order.

The sample partial autocorrelations at lags greater than p for an AR(p) time series are approximately independent and normal with a mean of 0 and a variance of $1/n$, where $n$ is the number of observations. Therefore, a confidence interval can be constructed as $\pm z/\sqrt{n}$ for a selected z-score $z$; for example, a 95% interval for $n = 100$ observations is $\pm 1.96/\sqrt{100} = \pm 0.196$. Lags with partial autocorrelations outside of the confidence interval indicate that the AR model's order is likely greater than or equal to the lag. Plotting the partial autocorrelation function and drawing the lines of the confidence interval is a common way to analyze the order of an AR model. To evaluate the order, one examines the plot to find the lag after which the partial autocorrelations all lie within the confidence interval. This lag is taken as the likely order of the AR model.
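The order-selection rule can be sketched in a few lines. This example assumes NumPy and estimates $\phi_{k,k}$ as the last coefficient of a least-squares AR(k) fit, a standard equivalent characterization of the partial autocorrelation used here in place of the residual-correlation definition. It then reports the largest lag whose estimate falls outside $\pm z/\sqrt{n}$. The helper names, the simulated AR(2) coefficients (0.5 and 0.3), and the choice of 10 lags are illustrative assumptions.

```python
import numpy as np


def ols_pacf(z, max_lag):
    """Estimate phi_{k,k} as the last coefficient of a least-squares AR(k) fit."""
    z = np.asarray(z, dtype=float) - np.mean(z)
    n = len(z)
    out = []
    for k in range(1, max_lag + 1):
        y = z[k:]                                        # z_t
        # Column j-1 holds z_{t-j} for j = 1, ..., k.
        X = np.column_stack([z[k - j:n - j] for j in range(1, k + 1)])
        coef, *_ = np.linalg.lstsq(X, y, rcond=None)
        out.append(coef[-1])                             # coefficient on z_{t-k}
    return np.array(out)


def select_ar_order(pacf_vals, n, z_score=1.96):
    """Largest lag whose |PACF| exceeds z_score / sqrt(n); 0 if none does."""
    band = z_score / np.sqrt(n)
    outside = np.flatnonzero(np.abs(pacf_vals) > band)
    order = int(outside[-1]) + 1 if outside.size else 0  # lags are 1-based
    return order, band


# Simulated AR(2): z_t = 0.5 z_{t-1} + 0.3 z_{t-2} + e_t (illustrative parameters).
rng = np.random.default_rng(2)
n = 2_000
eps = rng.standard_normal(n + 200)
z = np.zeros(n + 200)
for t in range(2, n + 200):
    z[t] = 0.5 * z[t - 1] + 0.3 * z[t - 2] + eps[t]
z = z[200:]                                              # drop the start-up transient

pacf_vals = ols_pacf(z, 10)
order, band = select_ar_order(pacf_vals, len(z))
print(f"band = +/-{band:.4f}, suggested order = {order}")
```

With a 95% band, roughly one lag in twenty is expected to fall outside it by chance even when its true partial autocorrelation is zero, so an isolated exceedance at a high lag is better weighed against the overall pattern in the plot than read mechanically as a higher order.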
